#LLM

Research

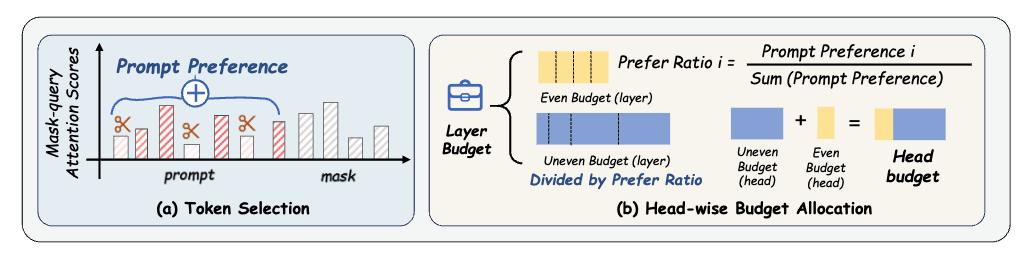

Mask Tokens as Prophet: Fine-Grained Cache Eviction for Efficient dLLM Inference

Jianuo Huang, Yaojie Zhang, Yicun Yang, Benhao Huang, Biqing Qi, Dongrui Liu, Linfeng Zhang

Oct 10th 2025

MaskKV exploits mask-token attention signals to evict low-utility KV pairs in diffusion LLMs, shrinking cache budgets while preserving long-context accuracy and increasing throughput.

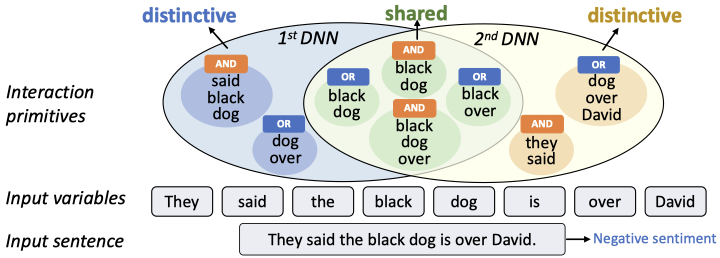

Defining and extracting generalizable interaction primitives from DNNs

Lu Chen, Siyu Lou, Benhao Huang, Quanshi Zhang

Given different DNNs trained for the same task, we developed a new method to extract the interactions shared by these DNNs. Experiments show that the extracted interactions can better reflect common ...