Research

2025

PAN: A World Model for General, Interactable, and Long-Horizon World Simulation

PAN brings imagination to life, fusing language, action, and vision to simulate how the world evolves with high realism and consistency.

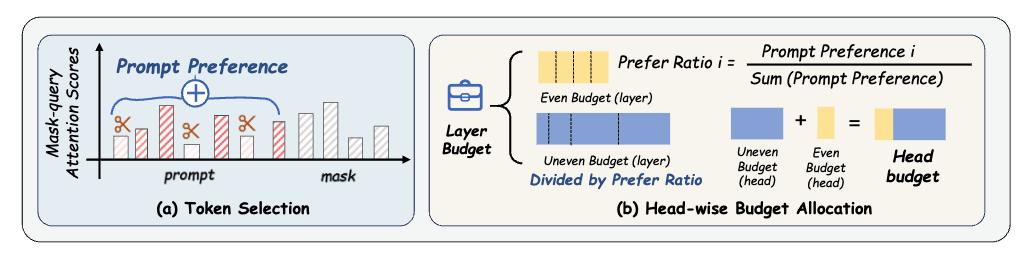

Mask Tokens as Prophet: Fine-Grained Cache Eviction for Efficient dLLM Inference

MaskKV exploits mask-token attention signals to evict low-utility KV pairs in diffusion LLMs, shrinking cache budgets while preserving long-context accuracy and increasing throughput.
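As a rough illustration of attention-guided eviction, the sketch below scores each cached position by the total attention it receives from mask-token queries and keeps only the top `budget` positions. The scoring rule, the function name `evict_kv`, and the `budget` parameter are hypothetical simplifications for illustration, not MaskKV's actual implementation.

```python
import math
from typing import List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    # Numerically stable softmax.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def evict_kv(
    keys: List[List[float]],
    values: List[List[float]],
    mask_queries: List[List[float]],
    budget: int,
) -> Tuple[List[int], List[List[float]], List[List[float]]]:
    """Keep the `budget` cached positions that mask-token queries attend to most.

    Hypothetical utility score: attention received by a position, summed over
    all mask-token queries.
    """
    d = len(keys[0])
    scores = [0.0] * len(keys)
    for q in mask_queries:
        # Scaled dot-product attention weights of this mask query over all keys.
        weights = softmax([dot(q, k) / math.sqrt(d) for k in keys])
        for i, w in enumerate(weights):
            scores[i] += w
    # Pick the highest-scoring positions, then restore their original order.
    ranked = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)
    keep = sorted(ranked[:budget])
    return keep, [keys[i] for i in keep], [values[i] for i in keep]
```

Sorting the retained indices keeps the surviving cache entries in sequence order, so any downstream positional bookkeeping is unaffected by the eviction.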

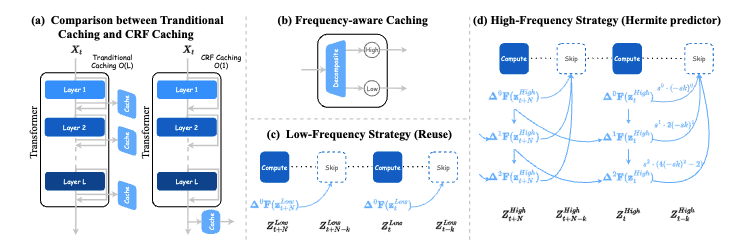

FreqCa: Accelerating Diffusion Models via Frequency-Aware Caching

FreqCa accelerates diffusion models by analyzing the frequency dynamics of features across timesteps, reusing low-frequency components and interpolating high-frequency ones while substantially reducing memory use.
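To make the idea of frequency-aware feature reuse concrete, here is a minimal sketch on 1-D features, assuming a naive DFT low-pass filter with a hypothetical `cutoff` parameter to separate the bands. The low band is reused from the previous step, and a simple two-point linear extrapolation stands in for the high-frequency interpolation; FreqCa's actual decomposition and interpolation scheme may differ.

```python
import cmath
from typing import List, Tuple


def dft(x: List[float]) -> List[complex]:
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(spec: List[complex]) -> List[float]:
    n = len(spec)
    return [(sum(spec[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def split_bands(x: List[float], cutoff: int) -> Tuple[List[float], List[float]]:
    """Split a real signal into low- and high-frequency parts (hypothetical cutoff)."""
    spec = dft(x)
    n = len(spec)
    # Keep bins up to `cutoff` and their mirrored negative-frequency partners.
    low_spec = [spec[k] if min(k, n - k) <= cutoff else 0j for k in range(n)]
    low = idft(low_spec)
    high = [a - b for a, b in zip(x, low)]
    return low, high


def predict_feature(prev2: List[float], prev1: List[float], cutoff: int) -> List[float]:
    """Estimate the next feature from the two previous cached features."""
    low1, high1 = split_bands(prev1, cutoff)
    _, high2 = split_bands(prev2, cutoff)
    # Reuse the slowly varying low band; linearly extrapolate the high band.
    return [lo + 2 * h1 - h2 for lo, h1, h2 in zip(low1, high1, high2)]
```

For example, if a constant low-frequency component stays fixed while an alternating high-frequency component grows by the same amount each step, `predict_feature` recovers the next step exactly (up to floating-point error).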

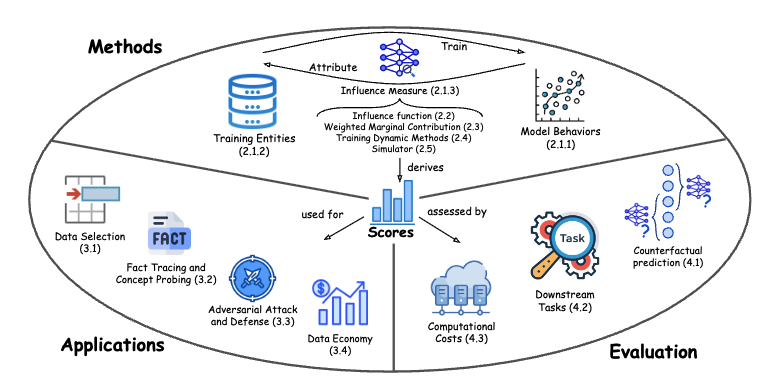

A Survey of Data Attribution: Methods, Applications, and Evaluation in the Era of Generative AI

A comprehensive survey of data attribution methods, applications, and evaluation protocols, addressing how training data influences the behavior of generative AI systems.

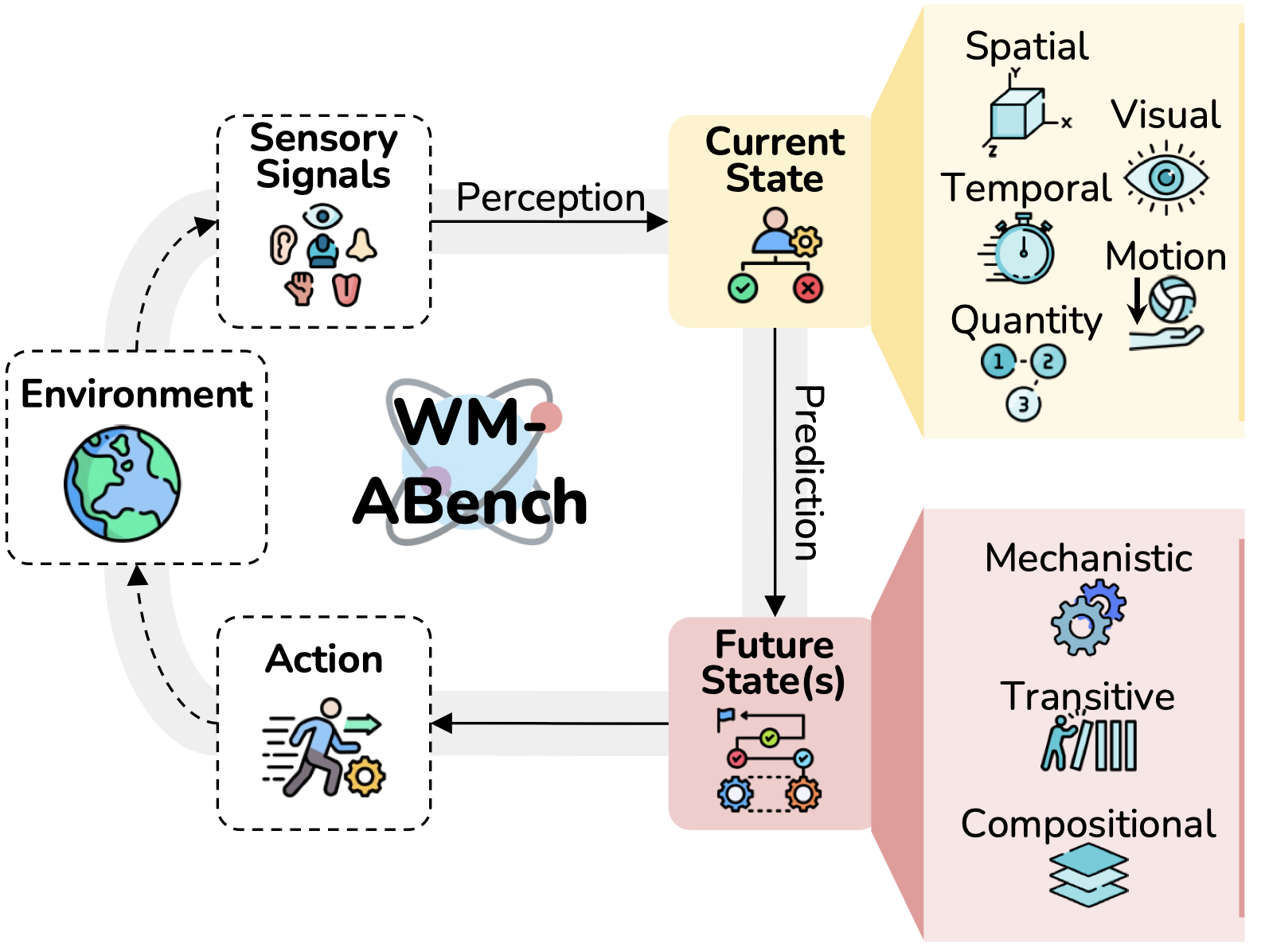

Do Vision-Language Models Have Internal World Models? Towards an Atomic Evaluation

This paper evaluates whether modern Vision-Language Models (VLMs) such as GPT-4o and Gemini can act as internal world models (WMs), that is, systems that understand and predict the world.

2024

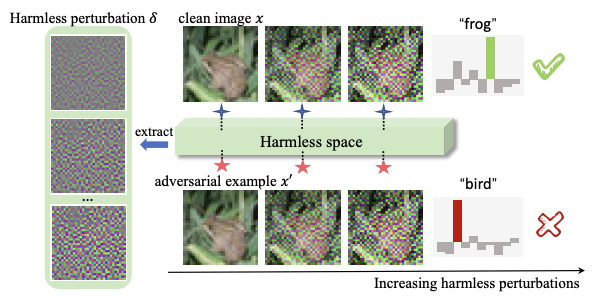

Contrasting Adversarial Perturbations: The Space of Harmless Perturbations

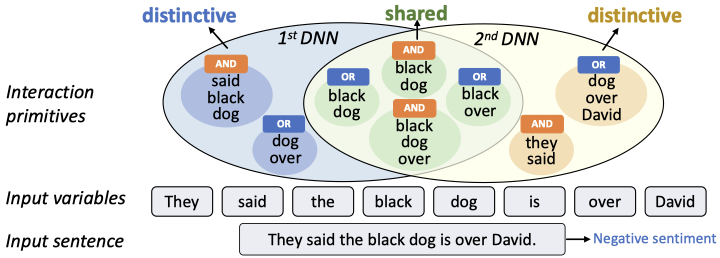

Defining and extracting generalizable interaction primitives from DNNs

Given different DNNs trained for the same task, this work develops a new method to extract the interactions shared by these DNNs. Experiments show that the extracted interactions can better reflect common ...